How to approach an assessment problem?

Humane assessment covers a large spectrum of software engineering problems that require decision making. To design ways of approaching these problems, we need to understand their nature, and for that we first need to identify their interesting characteristics.

Classically, problems have been characterized by the type of analysis used. Some examples are:

- static vs. dynamic,

- history vs. one version,

- code vs. bytecode, or

- metrics vs. queries vs. visualizations.

These are all important distinctions from a technological point of view, yet they say very little about the context in which each analysis should be used.

For example, to solve a performance problem, I recently built a tool that extracted dynamic information from log files. The log files were populated in two ways: through bytecode manipulation in one part of the system, and through logging instructions inserted directly into another part. The dynamic data was then linked with static information about the source code. The performance improvements were tracked through the execution history of the same use cases at different moments in time. Furthermore, the tool computed metrics to summarize the execution and offered visualizations that aggregated the data in various views.
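
To make the point concrete, here is a minimal Python sketch of such a pipeline. It is not the tool described above: the log format (`call=<method> ms=<duration>`), the `component_of` mapping, and all function names are hypothetical. Parsing the logs is the dynamic part, mapping methods to components stands in for the static information extracted from the source code, summing durations is a metric, and comparing two runs of the same use case is a history analysis.

```python
import re
from collections import defaultdict

# Hypothetical log format: one call per line, e.g. "call=OrderService.place ms=42"
LINE = re.compile(r"call=(?P<method>\S+)\s+ms=(?P<ms>\d+)")


def parse_log(lines):
    """Dynamic data: yield (method, duration in ms) for every matching log line."""
    for line in lines:
        match = LINE.search(line)
        if match:
            yield match.group("method"), int(match.group("ms"))


def time_per_component(lines, component_of):
    """Link dynamic data to static structure and summarize it with a metric:
    total milliseconds spent per component. Unmapped methods go to "<unknown>"."""
    totals = defaultdict(int)
    for method, ms in parse_log(lines):
        totals[component_of.get(method, "<unknown>")] += ms
    return dict(totals)


def compare_runs(before, after):
    """History: change in total time per component between two runs of a use case."""
    components = before.keys() | after.keys()
    return {c: after.get(c, 0) - before.get(c, 0) for c in sorted(components)}
```

For example, with `component_of = {"OrderService.place": "orders", "PriceCalculator.total": "pricing"}`, two runs whose logs yield 120/80 ms and 90/85 ms compare to `{"orders": -30, "pricing": 5}`. A handful of small functions already crosses every category listed earlier.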

Is this static analysis? Is it dynamic analysis? Is it history analysis? Is it even code analysis? Is it a metric? Is it a visualization? It's none of them, and all of them.

But does it matter? The distinction between analysis classes is useful for building communities that develop these analyses or for comparing technologies, but it is less useful for describing practical problems. In practice, what matters is how the questions at hand are mapped onto existing techniques.

Splitting problems along technological spaces is good for technologists, but it is a limiting proposition for practitioners. Assessment is a human activity, and as such, it is more productive to start from the nature of the problem than from the technical nature of the solution.

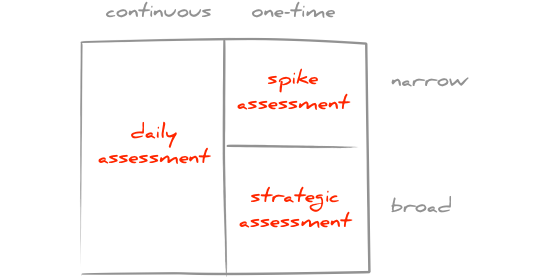

To this end, the processes proposed in humane assessment build on two simple questions:

- How often do you care about the problem?

- How large is the scope of the problem?

Daily assessment

If your problem is of continuous interest, you need to look at it continuously, regardless of what the issue is. For example, ensuring that the code follows the envisaged architecture is a permanent concern, whether you want to check how the Hibernate annotations are used or whether your coarse-grained layers are properly separated. In this case, the technical part of identifying problems is less interesting, because the problems have been encountered before. The communication side matters more: architecture is a group artifact that has to be regulated by the group. This is the focus of daily assessment.

Spike assessment

If your problem has a singular nature, its granularity plays a significant role. If the problem is clearly defined technically, you need to focus on getting to the bottom of it as soon as possible. For example, when you have to fix a bug related to a memory leak, finding the root cause is the single most pressing problem. In this situation, your productivity depends on how fast you can generate hypotheses and check them against the system. The faster you can do that, the better your chances of fixing things quickly. This is the focus of spike assessment. Once the problem is solved, you do want to check whether the lessons you learned during the spike assessment are of continuous interest. For example, if you identified the cause of the memory leak to be a missing release of a resource, you can probably institute a checker that identifies such cases in the future. In this case, you may want to make it a concern for daily assessment.
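
As an illustration of turning a spike finding into a daily check, the following Python sketch flags calls to the built-in `open` that are not used directly as a `with` context manager, that is, places where releasing the file handle depends on an explicit `close()`. Using Python's `ast` module and `open` as the resource is an assumption made for illustration; the system in the memory-leak example may use a different language and a different kind of resource, and `unreleased_opens` is a hypothetical name.

```python
import ast


def unreleased_opens(source: str) -> list[int]:
    """Return the line numbers of open(...) calls that are not the direct
    context expression of a `with` (or `async with`) statement."""
    tree = ast.parse(source)

    # First pass: remember every expression managed by a with-statement.
    managed = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.With, ast.AsyncWith)):
            for item in node.items:
                managed.add(id(item.context_expr))

    # Second pass: report open(...) calls outside that set.
    flagged = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "open"
            and id(node) not in managed
        ):
            flagged.append(node.lineno)
    return sorted(flagged)
```

The heuristic is deliberately coarse: it also flags handles that are later closed correctly by hand. That is usually acceptable for a check whose findings are reviewed by the team rather than treated as proof of a leak.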

Strategic assessment

If your problem appears only once but its scope is broad, the focus shifts towards identifying the technical sub-problems and synthesizing the findings in order to reach a decision. This is the focus of strategic assessment. For example, a performance problem stated as "the system is slow" cannot be answered directly by technical means. What does slow mean? Is it an algorithmic speed problem? Is it a scalability problem? How fast should it be? What parts are actually slow? These questions have to be clarified before you can start solving the individual issues through spike assessments.
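
The three cases can be summarized as a small decision function over the two questions asked earlier. The following Python sketch only illustrates the taxonomy; the enum names and the binary split of scope into narrow and broad are simplifying assumptions, since in practice both answers are a matter of judgment.

```python
from enum import Enum


class Frequency(Enum):
    ONCE = "once"              # the problem has a singular nature
    CONTINUOUS = "continuous"  # the problem is of continuous interest


class Scope(Enum):
    NARROW = "narrow"  # clearly defined technically
    BROAD = "broad"    # must first be split into technical sub-problems


class Assessment(Enum):
    DAILY = "daily"
    SPIKE = "spike"
    STRATEGIC = "strategic"


def classify(frequency: Frequency, scope: Scope) -> Assessment:
    """Map the answers to the two questions onto an assessment type."""
    if frequency is Frequency.CONTINUOUS:
        # Continuous concerns go to daily assessment, whatever their scope.
        return Assessment.DAILY
    if scope is Scope.NARROW:
        return Assessment.SPIKE
    return Assessment.STRATEGIC
```

Here `classify(Frequency.ONCE, Scope.NARROW)` corresponds to the memory-leak example (spike), `classify(Frequency.ONCE, Scope.BROAD)` to "the system is slow" (strategic), and any continuous concern maps to daily assessment regardless of scope.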

Not all problems are created equal. Humane assessment provides a taxonomy that distinguishes three classes of problems and offers a dedicated solution for each. In my experience, these solutions work consistently. However, the taxonomy should be perceived as a starting point rather than the ultimate answer. I am certain that there are other meaningful ways of categorizing problems.

After all, a discipline matures only when we can understand the problems in the field from multiple points of view. And assessment is a discipline.